Introducing Ares - The Fastest Way to Decode Anything

Or how rewriting a popular Python CLI tool in Rust increased our performance by 8445%

What is Ares?

Ares is the next generation of automated cipher solvers. Say you have some encrypted text such as LJIVE222KFJGUWSRJZ2FUUKSNNNFCTTLLJIVE5C2KFJGWWSRKJVVUUKOORNFCUTLLJIVE222KFHHIWSRKJVVUUKSNNNEOUTULJIU4222KFJGWWSRJZ2FUUKONNNFCTTKLJIU45C2KFJGWWSHJZVVUR2SORNFCUTLLJIVE222I5JHIWSRKJVVUR2ONJNEOTTULJIVE222KFJGWWSRJZ2FUUKSNNNFCTTLLJIU45C2KFHGWWSRJZVFUUKSHU======. You're not sure what the plaintext is or what dark magic was used to create this monstrosity.

Input it into Ares and...

Ares knows many different forms of encodings and encryptions and essentially bruteforces them all (but smartly) to find the plaintext.

There are 3 main parts to this:

- The Decoders - These are the things that, given encoded text, decode it using the right scheme.

- The Checkers - Once we've decoded text, how do we know that the text is the plaintext? Checkers "check" the text to see if it matches something (English language, IP Address, etc).

- The Searcher - Bruteforcing everything is nice, but it could be better. Our search algorithm determines the best route to take while searching for the plaintext.
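To make the three parts concrete, here is a minimal sketch of how decoders, checkers and a breadth-first searcher could fit together. The trait names, the `ContainsWord` checker and the `search` function are all hypothetical and written for illustration; they are not Ares's actual API.

```rust
use std::collections::VecDeque;

/// A decoder turns one text into zero or more candidate decodings.
/// Hypothetical trait for illustration, not Ares's real interface.
trait Decoder {
    fn name(&self) -> &'static str;
    fn decode(&self, text: &str) -> Vec<String>;
}

/// A checker decides whether a candidate looks like plaintext.
trait Checker {
    fn is_plaintext(&self, text: &str) -> bool;
}

struct Reverse;
impl Decoder for Reverse {
    fn name(&self) -> &'static str { "reverse" }
    fn decode(&self, text: &str) -> Vec<String> {
        vec![text.chars().rev().collect()]
    }
}

/// Caesar returns one candidate per non-trivial shift (25 in total).
struct Caesar;
impl Decoder for Caesar {
    fn name(&self) -> &'static str { "caesar" }
    fn decode(&self, text: &str) -> Vec<String> {
        (1..26u8)
            .map(|shift| {
                text.chars()
                    .map(|c| match c {
                        'a'..='z' => ((c as u8 - b'a' + shift) % 26 + b'a') as char,
                        'A'..='Z' => ((c as u8 - b'A' + shift) % 26 + b'A') as char,
                        _ => c,
                    })
                    .collect()
            })
            .collect()
    }
}

/// Toy checker: accepts any text containing a known word.
struct ContainsWord(&'static str);
impl Checker for ContainsWord {
    fn is_plaintext(&self, text: &str) -> bool {
        text.to_lowercase().contains(self.0)
    }
}

/// Breadth-first search: returns the decoder path and the plaintext, if found.
fn search(
    input: &str,
    decoders: &[Box<dyn Decoder>],
    checker: &dyn Checker,
    max_depth: usize,
) -> Option<(Vec<&'static str>, String)> {
    let mut queue = VecDeque::new();
    queue.push_back((Vec::new(), input.to_string()));
    while let Some((path, text)) = queue.pop_front() {
        if path.len() >= max_depth {
            continue; // do not expand nodes beyond the depth limit
        }
        for decoder in decoders {
            for candidate in decoder.decode(&text) {
                let mut new_path = path.clone();
                new_path.push(decoder.name());
                if checker.is_plaintext(&candidate) {
                    return Some((new_path, candidate));
                }
                queue.push_back((new_path, candidate));
            }
        }
    }
    None
}

fn main() {
    let decoders: Vec<Box<dyn Decoder>> = vec![Box::new(Reverse), Box::new(Caesar)];
    let checker = ContainsWord("hello");
    // "Hello, World!" Caesar-shifted by 3, then reversed.
    let result = search("!gourZ ,roohK", &decoders, &checker, 3);
    println!("{:?}", result);
}
```

Because the queue is first-in, first-out, every candidate at one depth is expanded before any candidate at the next depth, which is the behaviour the benchmark section relies on.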

Why differ from Ciphey?

First, a history of Ciphey.

Ciphey 1.0

Ciphey had many, many problems from its conception all the way back in 2008 when I first started working on it. I was a child back then (literally!) and did not know Python very well.

It initially started out as one very large file, and the only checker was a simple English dictionary lookup. It had no search algorithm, it supported very few decoders and it certainly wasn't open source (I had not heard of GitHub at the time!)

It was cleaned up a little a few years later (maybe 2012) and published to GitHub, but not much changed.

Ciphey 2.0

In 2019 I had the chance to work on a project over the summer, encouraged by a summer school I attended.

I decided to revive Ciphey! This time:

- It was open source

- It could decode multiple levels

- The English checker was a bit more advanced.

About a year later, I had the summer free again and decided to do more work on Ciphey. I told a classmate about it and we set out to work on it, which is where Ciphey is today.

Advanced search algorithms, better checkers, and more decoders. The code everyone uses and loves today.

However, it is not without its problems...

- C++ Core which we can't get rid of, or upgrade, since none of the current team knows C++ well enough.

- Many logic bugs exist in Python and it is hard to find them unless someone else tells us about them.

- Very, very few tests or documentation :(

- It is very hard to add any new features as it is all spaghetti code. Combined with few tests and no documentation, it is next to impossible to figure out how to do things.

- No real multi-core support in Python so it would always be bound to a single core.

In the end, I declared Ciphey dead and wanted to work on something else. I decided that recreating Ciphey in Rust was our best option.

What makes Ares so great?

Very, very fast

Ares is super fast. Previously, based on my Expert Opinion™️, Ciphey could maybe do ~5 - 20 decodings a second.

How was Ciphey's decodings per second benchmarked?

We gave Ciphey a string base64 encoded 42 times. It took 2 seconds to finish, which is about 20 decodings a second.

Something important to note is that Ciphey's search algorithm "learns" that it's likely base64 all the way down, so it then attempts it all the way down.

This makes it much quicker than if it were to attempt Caesar cipher, Vigenère etc instead. Therefore I approximate Ciphey is probably between 5 - 20 decodings a second, and we'll go with 20 since it's more impressive.

Ares can do... Well.

....

🥳 Ares has decoded 103 times times.

./ares -t 'IWRscm9XICxvbGxlSA==' -d 0.06s user 0.01s system 100% cpu 0.073 total

...103 times in 0.06 seconds, which is about 1709 decodings / second. Obviously this will change as the program evolves and it depends on the system it's run on. But currently with 16 decoders, 2 checkers and a boring Breadth-First Search on an Apple M2 it's going this fast for me.

How was Ares' Decodings Per Second benchmarked?

Ares cannot crack the string that was base64 encoded 42 times in a reasonable amount of time, due to the search algorithm and also due to decoders not failing when they should (thus increasing cardinality exponentially).

Ares can, however, measure decodings per second a little better than my guesswork for Ciphey.

Because Ares uses breadth-first search, all of the decoders on a level are executed.

Decoders can actually return multiple decodings (Caesar can return 25, for example).

Ares keeps track of what level it's at, so when we print how many decodings are done it does:

let decoded_times_int = depth * (decoders.components.len() as u32 + 25 + 25);

This is how many levels it's done, multiplied by how many decodings happen per level (the 25 is for Caesar and Binary Decoder, which are the only ones which do 25 decodings).
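The counting rule can be wrapped in a small function to make the arithmetic explicit. This is only a sketch: the function name and the constants are hypothetical, and the decoder count of 10 in `main` is an arbitrary illustrative value rather than a figure from Ares.

```rust
/// Mirrors the counting rule quoted above: levels completed, multiplied by
/// the decodings attempted per level. Hypothetical helper for illustration.
fn decodings_done(depth: u32, decoder_count: usize) -> u32 {
    // The two decoders that each contribute 25 extra decodings per level.
    const EXTRA_CAESAR: u32 = 25;
    const EXTRA_BINARY: u32 = 25;
    depth * (decoder_count as u32 + EXTRA_CAESAR + EXTRA_BINARY)
}

fn main() {
    // Illustrative values only: 2 completed levels with 10 registered decoders.
    println!("{}", decodings_done(2, 10));
}
```

With those illustrative inputs, each level accounts for 10 + 25 + 25 = 60 decodings, so two levels give 120.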

This benchmark was performed with the string "IWRscm9XICxvbGxlSA==".

This string is encoded Reverse -> Base64, which means Ares would need to decode Base64 first and then reverse.

Because of breadth-first search, Ares will always try Reverse first (thanks to this PR) and it will always complete a full level before moving onto the next level.

Therefore, for Ares to fully decode this string, it needs to do 1 whole level (102 decodings) and then execute the first decoder (reverse) on the second level (1 decoding), which results in 103 total decodings.

⚠️ Ares is multi-threaded, so 103 decodings is actually the lower limit for this. It is very likely Ares tried more decoders on the second level than just reverse.

To calculate the absolute minimum number of decodings per second Ares ran, I used this formula:

103 decodings in 0.06 seconds. 1 second / 0.06 ≈ 16.6, and 103 * 16.6 ≈ 1709 decodings / second

🥳 Ares Minimum Decoders / second: 1709

🫢 Ciphey maximum decoders / second: ~20
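The arithmetic behind these two figures, and the percentage in the subtitle, can be checked in a few lines. The helper names are made up for this sketch; the inputs (103 decodings, 0.06 seconds, 20 decodings a second for Ciphey) come from the text above.

```rust
/// Decodings per second for `count` decodings in `seconds` of CPU time.
fn per_second(count: f64, seconds: f64) -> f64 {
    count / seconds
}

/// Percentage increase going from `old` to `new`.
fn percent_increase(old: f64, new: f64) -> f64 {
    (new - old) / old * 100.0
}

fn main() {
    // Dividing directly, without rounding 1 / 0.06 down to 16.6 first.
    let exact = per_second(103.0, 0.06);
    // The article's route: truncate 1 / 0.06 to 16.6, then multiply.
    let rounded = 103.0 * 16.6;
    // Ciphey's optimistic 20 decodings / second versus Ares's 1709.
    let gain = percent_increase(20.0, 1709.0);
    println!("exact: {exact:.1}, rounded: {rounded:.1}, gain: {gain:.0}%");
}
```

Dividing directly gives roughly 1716.7 decodings a second; rounding 1 / 0.06 down to 16.6 first gives 1709.8, which is where the 1709 figure comes from. Going from 20 to 1709 is an increase of 8445%.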

Library First

Ares is library first, whereas Ciphey was CLI first with a library tacked on that had no tests and no one used.

In Ares, the CLI is a program which uses the library. That means you can integrate Ares into a lot of things with no issues.

With Ciphey we had a lot of ideas (a website, an API, a Discord bot) and while we could get it to work, it wasn't very nice....

Timers

If you gave some encrypted text to Ciphey, logically one of 2 things would happen:

- It found the plaintext and returned.

- It didn't find the plaintext and, because the search space is infinite, it ran forever.

Many people didn't understand (2) and assumed it was broken. Because of this, Ares has a built-in timer which stops the program after X time.
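One simple way to bound an otherwise endless search is to check a deadline between steps. The sketch below shows that idea only; `run_with_timeout` and the closure-based "step" are hypothetical and do not describe how Ares's timer is actually implemented.

```rust
use std::time::{Duration, Instant};

/// Calls `step` repeatedly until it yields a result or `timeout` elapses.
/// Hypothetical helper: each call to `step` stands in for one unit of search work.
fn run_with_timeout<T>(timeout: Duration, mut step: impl FnMut() -> Option<T>) -> Option<T> {
    let deadline = Instant::now() + timeout;
    while Instant::now() < deadline {
        if let Some(found) = step() {
            return Some(found);
        }
    }
    None // gave up: the deadline passed without finding plaintext
}

fn main() {
    // A search that never succeeds stands in for text that cannot be decoded.
    let never: Option<String> = run_with_timeout(Duration::from_millis(50), || None);
    println!("{:?}", never);

    // A search that succeeds on its third step returns well before the deadline.
    let mut attempts = 0;
    let found = run_with_timeout(Duration::from_secs(1), || {
        attempts += 1;
        if attempts == 3 { Some("plaintext") } else { None }
    });
    println!("{:?}", found);
}
```

The deadline is only checked between steps, so a single very slow step can still overrun the timeout; a real implementation would need to decide how much overrun is acceptable.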

LemmeKnow is fast

One of the biggest additions to Ciphey was PyWhat, a library full of regex to identify data.

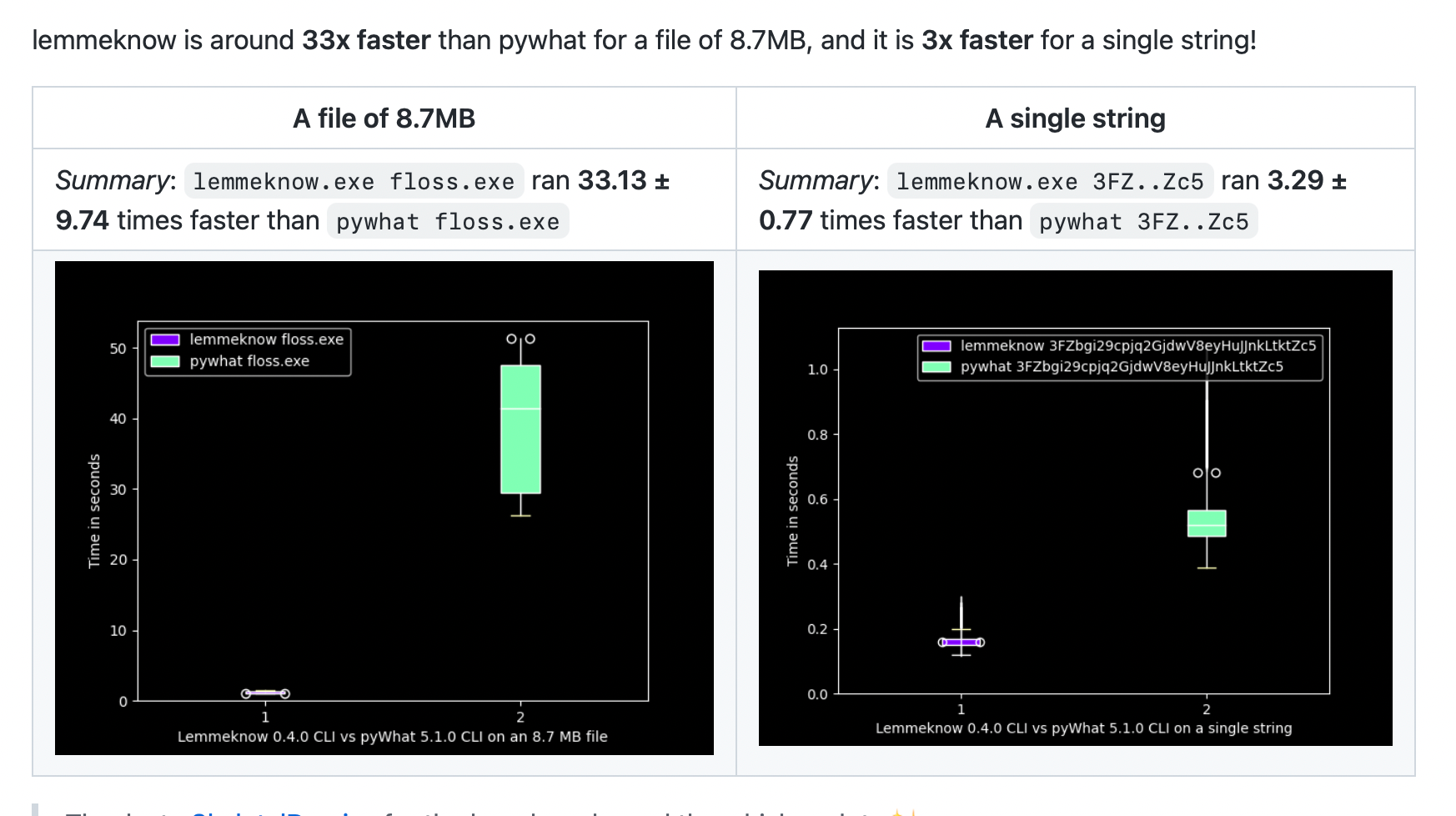

PyWhat has been rewritten in Rust as LemmeKnow, which is 3300% faster than the Python version for large files, and 300% faster for smaller strings.

This means that the entire program has sped up significantly ⚡️

Multi-threading

Ciphey did not support multi-threading, mostly due to issues with Python. Ares natively supports it with Rayon, one of the fastest multi-threading libraries out there.
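As an illustration of what running one level's decoders in parallel looks like, here is a sketch using only the standard library's scoped threads, so that it compiles without external crates. It is a stand-in, not Ares's code: with Rayon the same fan-out would typically be written with a parallel iterator such as `par_iter()`. The toy decoders and the `decode_level_parallel` name are hypothetical.

```rust
use std::thread;

// Toy decoders as plain functions (hypothetical, for illustration only).
fn reverse(text: &str) -> String {
    text.chars().rev().collect()
}

fn uppercase(text: &str) -> String {
    text.to_uppercase()
}

fn rot13(text: &str) -> String {
    text.chars()
        .map(|c| match c {
            'a'..='z' => ((c as u8 - b'a' + 13) % 26 + b'a') as char,
            'A'..='Z' => ((c as u8 - b'A' + 13) % 26 + b'A') as char,
            _ => c,
        })
        .collect()
}

/// Runs every decoder on `text` in its own scoped thread and collects the
/// results in the same order as `decoders`.
fn decode_level_parallel(text: &str, decoders: &[fn(&str) -> String]) -> Vec<String> {
    thread::scope(|scope| {
        // Spawn all threads first so the decoders actually run concurrently.
        let handles: Vec<_> = decoders
            .iter()
            .map(|&decoder| scope.spawn(move || decoder(text)))
            .collect();
        handles
            .into_iter()
            .map(|handle| handle.join().expect("decoder thread panicked"))
            .collect()
    })
}

fn main() {
    let decoders: [fn(&str) -> String; 3] = [reverse, uppercase, rot13];
    println!("{:?}", decode_level_parallel("Hello", &decoders));
}
```

Spawning one OS thread per decoder is wasteful for real workloads; a work-stealing thread pool, which is what Rayon provides, avoids that overhead.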

Downsides

It feels slow

I believe the current implementation of Ares is quite slow. There are 3 major things we can do to speed it up:

- Proper use of lifetimes. We .clone() a lot instead of correctly passing references around. There are a lot of small details like this that we adopted to ship faster, and we can clean them up to gain some speed.

- Not all decoders that can fail, fail. There are many decoders in Ares (like Base64) which do not always fail. In Python, if you decode something with Base64 and it does not decode 100% correctly, it will fail. In Rust, the library will still give you back some data. This means that there is high cardinality: every time we receive back "bad" data, the search space multiplies exponentially, so we have to search more and it takes longer to do things.

- The search algorithm is extremely basic. Ciphey uses a smarter AI-based one which chooses the most likely + fastest decoders, and can follow paths all the way down (so if you try to decode Base64 10 times, Ciphey will 'learn' and only try Base64 for a while). Ares, by contrast, uses breadth-first search and tries everything equally.

While Ares beats Ciphey at pure decodings / second speed, Ciphey's use of an advanced search algorithm and failing decodings often means it appears faster in terms of "how many seconds does it take to get the plaintext".
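To illustrate the second point, one way to make a decoder "fail when it should" is to validate the input strictly before decoding, so that malformed candidates are dropped instead of being pushed back into the search. The sketch below is an assumption about how that could look, not Ares's implementation: `is_strict_base64` and `prune_candidates` are hypothetical names, and the check is simplified (it validates length, alphabet and padding position, but not the unused bits before the padding).

```rust
/// Returns true only if `input` looks like well-formed, padded standard Base64.
/// Simplified check for illustration.
fn is_strict_base64(input: &str) -> bool {
    let bytes = input.as_bytes();
    // Padded Base64 always has a length that is a non-zero multiple of 4.
    if bytes.is_empty() || bytes.len() % 4 != 0 {
        return false;
    }
    // Padding may only appear as one or two '=' at the very end.
    let padding = bytes.iter().rev().take_while(|&&b| b == b'=').count();
    if padding > 2 {
        return false;
    }
    // Everything before the padding must come from the Base64 alphabet.
    bytes[..bytes.len() - padding]
        .iter()
        .all(|&b| b.is_ascii_alphanumeric() || b == b'+' || b == b'/')
}

/// Keeps only the candidates worth handing to a Base64 decoder.
fn prune_candidates<'a>(candidates: &[&'a str]) -> Vec<&'a str> {
    candidates
        .iter()
        .copied()
        .filter(|candidate| is_strict_base64(candidate))
        .collect()
}

fn main() {
    let candidates = ["IWRscm9XICxvbGxlSA==", "hello world", "SGVsbG8", "ab=c"];
    // Only the first candidate survives; the rest never enter the next level.
    println!("{:?}", prune_candidates(&candidates));
}
```

Every candidate rejected here is one fewer node to expand on the next level, which matters because each surviving node is multiplied by the number of decodings per level.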

Not as many decoders

Because Ares is new™️ and in Rust, it currently doesn't support as many decoders as Ciphey does :(

How you can contribute

We have a bunch of Good First Issues that we need help with <3

Alternatively, you can implement some decoders. We list all of the ones we do and don't have here: